Unlocking Streaming Data Pipelines on Google Cloud Platform

Learn how DataCater runs streaming data pipelines on GCP.

Learn how DataCater runs streaming data pipelines on GCP.

You are running your data architecture on the Google Cloud Platform (GCP) and want to start using streaming data pipelines? Maybe you want to empower your data ingestion layer with real-time capabilities or allow data consumers to always work with fresh data?

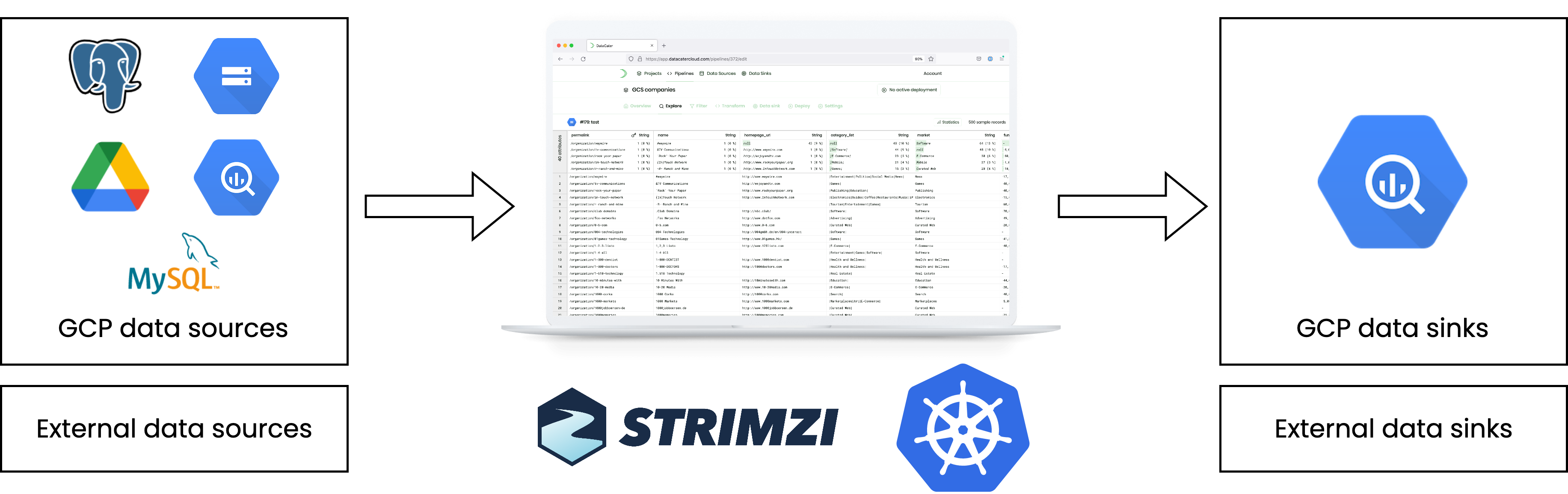

In this article, we show how we tailored the Kubernetes-based deployment of DataCater to the Google Cloud Platform, making self-service streaming data pipelines available on GCP.

In detail, we will …

discuss how DataCater leverages the Google Kubernetes Engine (GKE) as compute and intermediary storage layer for data pipelines,

show how immutable data pipeline revisions can be managed in Google Cloud's Container Registry,

highlight Cloud SQL's support for log-based change data capture (CDC),

and present how change data capture can be used with other data sources, like Google Cloud Storage (GCS), Google Drive and put into Google Cloud BigQuery for fresh Analytics data.

While batch data pipelines perform recurring bulk loads, streaming data pipelines treat data as continuous flows. Streaming data pipelines capture changes from data sources in real-time, process these changes instantly, and publish them to downstream data sinks.

They are not only loved by data consumers, which are able to work with fresh data at any time, but also preferred in cloud computing. As discussed in the whitepaper on cloud-native data pipelines, the continuous workloads of streaming data pipelines can benefit from the elastic scaling of Kubernetes much more than bulk loads.

DataCater uses Kubernetes as foundational execution engine for data pipelines. It compiles data pipelines, built with its no-code pipeline designer or defined as declarative YAML files, to streaming applications, which are packaged as container images and deployed on a Kubernetes cluster.

Kubernetes is the omnipresent cloud-native platform and helps DataCater to adapt to load changes elastically - both on the cluster and pipeline level.

Being the original builders of Kubernetes, Google Cloud offers an excellent managed Kubernetes service, Google Kubernetes Engine (GKE). For us, it was a clear choice to deploy DataCater on GKE.

In addition to Kubernetes, DataCater builds on top of the Apache Kafka ecosystem. Strimzi is a great technology for operating Apache Kafka and Apache Kafka Connect on a Kubernetes cluster. We recommend Strimzi for deploying Apache Kafka on GCP.

DataCater's installation on GKE has a couple of adaptions to integrate best with GCP. For instance, DataCater uses an External HTTP(S) load balancer for ingress and manages certificates natively with GKE's networking features via a ManagedCertificate. Managed certificates are enabled if you use Google Cloud DNS. GCP's load balancer allows for all sorts of declarative configuration of connectivity. For reference, please have a look at the GKE Ingress Features.

Container images are the building blocks in Kubernetes. For each pipeline revision, DataCater creates an immutable container image.

For the management of container images, DataCater uses Google Cloud's Container Registry when deployed on GCP.

The Container Registry allows DataCater to store container images close to the place of compute, i.e., the Kubernetes cluster, which benefits the startup time of pipeline deployments.

At DataCater, we breathe change data capture. All of our source connectors implement change data capture to some degree, allowing to extract incremental updates from data sources. For database systems, log-based change data capture is the most efficient and straightforward implementation of CDC.

GCP Cloud SQL offers a fully-managed hosting of the database systems MySQL, PostgreSQL, and SQL Server.

For all three database systems, Cloud SQL supports log-based change data capture, which allows DataCater to extract data from Cloud SQL databases efficiently.

When connecting to a Cloud SQL database instance from a GCP-hosted DataCater installation, we recommend the usage of the Cloud SQL Proxy for a secure authentication.

Speaking of change data capture, DataCater does not only offer CDC source connectors for database systems but also for file systems and object stores.

Google Drive and Google Cloud Storage are popular services for managing flat files in formats like CSV, JSON, or XML.

DataCater's source connectors can monitor a configured Google Drive folder or GCS bucket for updates. Once a new or updated file has been detected, DataCater extracts its content in (near) real-time, might transform it, and streams it to downstream data sinks, like Google Cloud BigQuery.

Google Cloud BigQuery is one of the few cloud data warehouses with true support for streaming inserts, allowing DataCater to stream data (change events) from GCP data sources, like Cloud SQL, Google Drive, or Google Cloud Storage, in real-time to BigQuery tables. This makes BigQuery the perfect data sink in your GCP environment.

But ... Did you know that you can use BigQuery as a data source, too? DataCater offers a source connector for Google Cloud BigQuery. Although BigQuery does not offer replication logs, like Cloud SQL, DataCater is able to employ query-based CDC for extracting data changes from BigQuery tables.

From our experience, most use cases employing BigQuery as a data source do not stream data to another GCP service but use external data sinks, like an external REST API.

Nowadays, many people refer to the usage of a cloud data warehouse as a data source as Reverse ETL. To us it's just another data pipeline.

We briefly sketched how DataCater integrates with the different offerings of the Google Cloud Platform to make self-service streaming data pipelines available on GCP. The Google Kubernetes Engine serves as the foundational compute layer for data pipelines. We even recommend to run Apache Kafka and Apache Kafka Connect on top of GKE. Change data capture can be used with many of GCP's data systems - from Cloud SQL databases to Google Cloud Storage and Google Cloud BigQuery. In the next couple of weeks, we will open-source our Helm chart, offering a plug-and-play installation of DataCater on GKE. Stay tuned!

Want to learn more about the concepts behind cloud-native data pipelines? Download our technical white paper for free!