Using Apache Kafka in development and test environments

Learn how to set up Apache Kafka for your development and test environments.

Apache Kafka is an open-source data streaming platform for working with data in motion. More than 80% of all Fortune 100 companies trust Apache Kafka and use it to implement use cases and applications, such as email notification systems, fraud detection systems, and others.

In this article, I discuss how to set up a Kafka cluster for testing and development purposes. Since GDPR requires businesses to protect personal data during transactions and data processing, it’s necessary to take a second look at how to set up a test or development environment for Apache Kafka. I discuss different ways to anonymize personal data and comply with GDPR rules.

In this section, I take a look at different approaches to configuring a Kafka cluster for a development or test environment and provide the advantages and disadvantages of each approach.

In many cases, engineering teams use local machines to develop and build software applications and services, before rolling them out to a shared staging or pre-production environment. They might prefer to set up a Kafka test cluster on a local machine, using tools such as Docker Compose.

Unfortunately, setting up a Kafka test cluster on a local machine might not produce the best results, for the following reasons:

While a local Kafka cluster on a developer machine might be easier to set up, especially when using prepared Docker Compose manifests, a Kafka test environment on a shared cluster overcomes the technical limitations of the local approach for the following reasons:

When comparing local with shared clusters, shared clusters (typically) provide better performance and can be used for working with real data. The latter might not always be possible without taking care of data privacy rules. In the following section, I discuss how to mask or anonymize production data for development and test purposes.

Mirroring production data to a local Kafka cluster on a developer machine is hard, which is why synthetic data is typically recommended for local setups. Synthetic data can also be generated locally without internet access, which suits a developer machine well.

For shared test or development clusters running on remote servers, we can decide between generating synthetic data or replicating real data from the production environment.

For generating synthetic data, the open-source community provides several tools, such as Python Faker or AnonymizeDF. While these tools make generating synthetic data sets straightforward, synthetic data has the downside that it only approximates real-world data, which makes it hard to test applications in a realistic way.
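As a rough illustration of what such generated records can look like, the following sketch builds a few fake account records using only Python's standard library, so it runs without installing Faker. The field names mirror the example topic used later in this article; the name lists and the helper `synthetic_user_account` are hypothetical and only serve the illustration.

```python
import json
import random

# Hypothetical sample values; a library such as Faker offers far richer data.
FIRST_NAMES = ["Alice", "Bob", "Carla", "Deniz"]
LAST_NAMES = ["Ng", "Okafor", "Schmidt", "Rossi"]


def synthetic_user_account(rng: random.Random) -> dict:
    """Build one fake record with the fields fullname, account_name, cardnum."""
    first = rng.choice(FIRST_NAMES)
    last = rng.choice(LAST_NAMES)
    # 16 random digits starting with 51-55 (a Mastercard-style prefix).
    # The number is made up and carries no valid check digit.
    cardnum = str(rng.randint(51, 55)) + "".join(
        str(rng.randint(0, 9)) for _ in range(14)
    )
    return {
        "fullname": f"{first} {last}",
        "account_name": f"{first}.{last}".lower(),
        "cardnum": cardnum,
    }


rng = random.Random(42)  # fixed seed so repeated runs produce the same data
records = [synthetic_user_account(rng) for _ in range(3)]
for record in records:
    print(json.dumps(record))
```

Each printed line is a JSON document that could be written to a test topic with the Kafka client of your choice.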

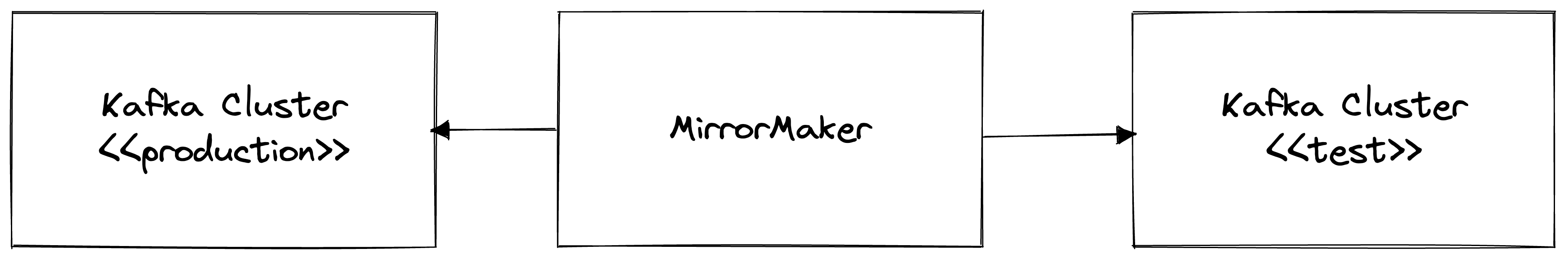

Replicating data from production environments to a shared development or test cluster can be handled with tools such as Kafka’s own MirrorMaker. MirrorMaker sits between different Kafka clusters, as shown in the diagram above, and replicates topics byte by byte.

Under the hood, MirrorMaker is powered by Kafka Connect, another product from the Apache Kafka project, to ease configuration and scalability.

Most companies work with customer data. Thus, in most cases, we cannot simply copy data from production to test environments but need to make sure that all sensitive information is removed before the data leaves the production environment. In the following sections, I introduce two approaches for masking data, using ksqlDB (SQL) and DataCater (Python).

ksqlDB provides the MASK() function for obfuscating PII or personal data.

Let’s assume that our production cluster features the Kafka topic user_accounts, holding records in the JSON format with three fields: fullname, account_name, and cardnum.

In ksqlDB, we can create a STREAM out of the existing topic as shown below:

CREATE STREAM users_account
  (fullname VARCHAR, account_name VARCHAR, cardnum VARCHAR)
  WITH (kafka_topic='user_accounts', partitions=2, value_format='json');

Once we have defined the STREAM, we can build the following SQL query to mask the cardnum field:

CREATE STREAM masked_user_accounts AS
  SELECT fullname, account_name, MASK(cardnum) AS cardnum
  FROM users_account
  EMIT CHANGES;

You can verify that the PII has been masked correctly by querying the stream masked_user_accounts:

SELECT * FROM masked_user_accounts EMIT CHANGES;
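To get a feel for what the masked values look like, the sketch below imitates the documented default behavior of MASK() in plain Python: uppercase letters become X, lowercase letters become x, digits become n, and every other character becomes -. The helper `mask_like_ksqldb` is hypothetical and not part of ksqlDB; it only approximates the function for illustration.

```python
def mask_like_ksqldb(value: str, upper: str = "X", lower: str = "x",
                     digit: str = "n", other: str = "-") -> str:
    """Imitate the default character classes of ksqlDB's MASK() (assumption:
    uppercase -> X, lowercase -> x, digit -> n, anything else -> -)."""
    masked = []
    for ch in value:
        if ch.isupper():
            masked.append(upper)
        elif ch.islower():
            masked.append(lower)
        elif ch.isdigit():
            masked.append(digit)
        else:
            masked.append(other)
    return "".join(masked)


print(mask_like_ksqldb("5105105105105100"))  # nnnnnnnnnnnnnnnn
print(mask_like_ksqldb("My Test $123"))      # Xx-Xxxx--nnn
```

Note that this kind of masking keeps the length and the character classes of the original value, which is often enough for testing parsers and validators downstream.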

Another option for dealing with PII-related columns would be excluding them. This is only viable if you do not need them in downstream processing.
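In ksqlDB, excluding a column simply means leaving it out of the SELECT list. The same idea can be sketched in Python for a single JSON record; the helper `drop_pii` and the set `PII_FIELDS` are hypothetical names chosen for this illustration.

```python
import json

# Assumption: cardnum is the only field we treat as PII in this example.
PII_FIELDS = {"cardnum"}


def drop_pii(raw_value: str, pii_fields=PII_FIELDS) -> str:
    """Parse a JSON record and return it without the PII fields."""
    record = json.loads(raw_value)
    cleaned = {key: val for key, val in record.items() if key not in pii_fields}
    return json.dumps(cleaned)


raw = '{"fullname": "Alice Ng", "account_name": "alice.ng", "cardnum": "5105105105105100"}'
print(drop_pii(raw))
```

Compared with masking, dropping a field changes the schema of the records, so consumers in the test environment must tolerate the missing column.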

DataCater is one of the few data streaming platforms that support Python transformations. Python is a very powerful programming language and can be applied to a lot of use cases.

For masking data, Python’s support for regular expressions is very handy.

For instance, we might want to mask a field that holds a Mastercard number. We can use Python’s re.sub function to (1) check whether the field matches a regular expression for Mastercard numbers and (2) replace the match with a placeholder made of X characters:

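import re

def transform(field, row):
    # If the whole value is a 16-digit Mastercard number (prefix 51-55 or
    # 2221-2720), replace it with a fixed placeholder; otherwise return it as-is.
    return re.sub(
        r"^(5[1-5][0-9]{14}|2(22[1-9][0-9]{12}|2[3-9][0-9]{13}|[3-6][0-9]{14}|7[0-1][0-9]{13}|720[0-9]{12}))$",
        "XXX-XXXX-XXXX",
        field)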
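A simpler variant, sketched below, replaces every single digit with an X regardless of the card brand, which also preserves separators such as spaces or dashes. The helper `mask_digits` and its `keep_last` parameter are hypothetical additions for illustration, not part of DataCater.

```python
import re


def mask_digits(field: str, keep_last: int = 0) -> str:
    """Replace each digit with X, optionally leaving the last `keep_last`
    digits readable (for example, to show the final four digits of a card)."""
    total_digits = len(re.findall(r"\d", field))
    seen = 0

    def replace(match: re.Match) -> str:
        nonlocal seen
        seen += 1
        # Keep the digit only if it is among the last `keep_last` digits.
        return match.group(0) if seen > total_digits - keep_last else "X"

    return re.sub(r"\d", replace, field)


print(mask_digits("5105 1051 0510 5100"))               # XXXX XXXX XXXX XXXX
print(mask_digits("5105 1051 0510 5100", keep_last=4))  # XXXX XXXX XXXX 5100
```

Keeping the last few digits is a common compromise when testers need to tell records apart without seeing the full number.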

In addition to regular expressions, there are dedicated Python modules for data masking. For example, see the scrubadub documentation to find out how it masks sensitive values.

Testcontainers is a Java library that makes containers accessible in unit tests. It lets you dynamically spin up data services, like Apache Kafka or PostgreSQL, from within your Java code. Testcontainers supports use cases such as data access layer integration tests, application integration tests, and acceptance tests.

Let’s imagine that we built a stream processing application based on Apache Kafka and we want to develop unit tests for verifying the correctness of the different components in an automated manner. Prior to the availability of Testcontainers, you would typically spin up a local Apache Kafka cluster inside the workflow of your CI pipeline and run your tests against this instance. This would require managing the Kafka cluster outside of your unit tests. With Testcontainers, you can start a Kafka cluster, using the configuration of your choice, from within your unit tests and access it directly in Java, which makes managing Kafka much easier.

Testcontainers provides the class KafkaContainer, which you can use to start a Kafka instance using a specific image version with just one line of code, as shown below:

KafkaContainer kafka = new KafkaContainer(DockerImageName.parse("confluentinc/cp-kafka:6.2.1"));

Testcontainers is mainly useful for automated software tests, where you typically use synthetic data, while shared test clusters are the preferred choice when performing manual (acceptance) tests or when working with (masked) production data.